Information Factory Algorithm Hosting

The Information Factory Algorithm Hosting Capability lets value-adders onboard custom algorithms that run inside the Information Factory ecosystem and integrate directly with its services, including the Data Lake, the Dynamic Data Cube service, and other auxiliary resources.

Hosted algorithms can be executed on demand or on a schedule through a headless execution framework, with full access to compute and storage capabilities, visualization tools, and data outputs.

Key Features

Integrated Data Access

Algorithms have direct access to:

The Information Factory Data Lake, a centralized repository for geospatial and environmental data.

The Dynamic Data Cube service, which provides structured access to satellite, soil, meteorological, and other data types, facilitating streamlined and efficient data ingestion.

Algorithm Execution and Monitoring

Algorithms onboarded into the system can be executed through HTTP-based APIs that closely resemble an OGC WPS 2.0 process definition:

Execution Start: Users specify input parameters and trigger the execution.

Progress Tracking: Visual progress feedback is shown while the process runs, keeping the user experience interactive despite backend limitations.

Results Retrieval: Outputs are stored in the Data Lake or made accessible through predefined endpoints for further analysis.

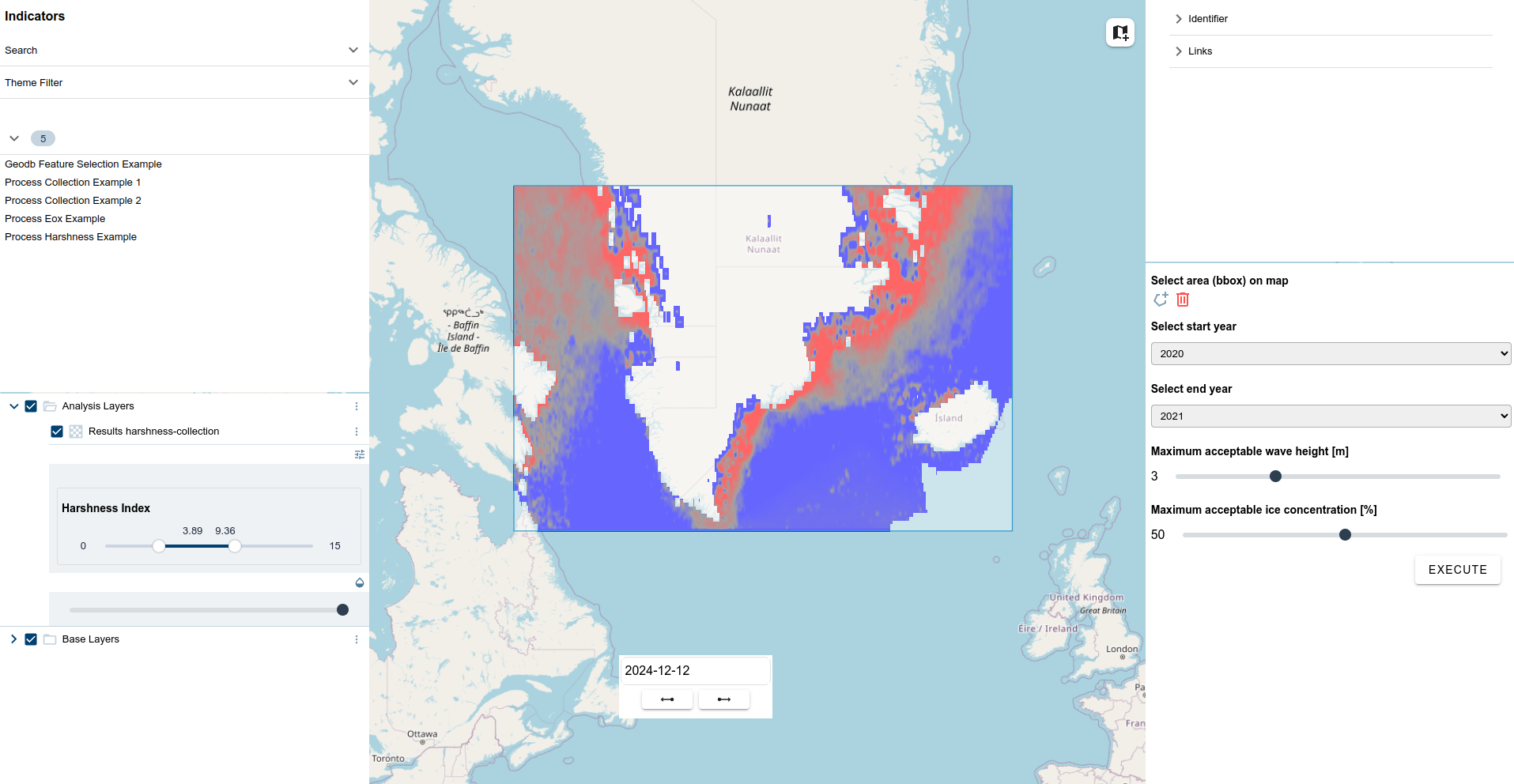
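The start, track, and retrieve cycle described above can be sketched as a small Python client. This is a minimal sketch, assuming an asynchronous job interface in the style of OGC API - Processes: the base URL, the `/{process_id}/execution` route, the `Location` header pointing at a job status document, and the `status`/`progress` fields are all assumptions, not the documented Information Factory API.

```python
import json
import time
import urllib.request
from typing import Any, Callable, Dict

# Hypothetical base URL; the real Information Factory endpoint will differ.
BASE_URL = "https://example.org/processes"

# Terminal job states as named in OGC API - Processes (assumed to apply here).
TERMINAL_STATES = {"successful", "failed", "dismissed"}


def build_execute_body(inputs: Dict[str, Any]) -> bytes:
    """Serialize the user-specified input parameters into a JSON request body."""
    return json.dumps({"inputs": inputs}).encode("utf-8")


def submit(process_id: str, inputs: Dict[str, Any]) -> str:
    """Start an asynchronous execution and return the job status URL."""
    request = urllib.request.Request(
        f"{BASE_URL}/{process_id}/execution",
        data=build_execute_body(inputs),
        headers={"Content-Type": "application/json", "Prefer": "respond-async"},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        # Assumed: the server answers 201 Created with a Location header.
        return response.headers["Location"]


def http_status_getter(job_url: str) -> Callable[[], Dict[str, Any]]:
    """Return a function that fetches the current job status document."""
    def get_status() -> Dict[str, Any]:
        with urllib.request.urlopen(job_url) as response:
            return json.load(response)
    return get_status


def wait_for_job(
    get_status: Callable[[], Dict[str, Any]],
    poll_seconds: float = 5.0,
    timeout_seconds: float = 3600.0,
    on_progress: Callable[[int], None] = lambda pct: None,
) -> Dict[str, Any]:
    """Poll until the job reaches a terminal state, reporting progress (0-100)."""
    deadline = time.monotonic() + timeout_seconds
    while True:
        status = get_status()
        on_progress(int(status.get("progress", 0)))
        if status["status"] in TERMINAL_STATES:
            return status
        if time.monotonic() >= deadline:
            raise TimeoutError("job did not reach a terminal state before the timeout")
        time.sleep(poll_seconds)
```

A run would look like `job_url = submit("harshness-map", inputs)` followed by `wait_for_job(http_status_getter(job_url), on_progress=print)`, where the process ID is hypothetical; on success, results would then be fetched from the Data Lake or a result endpoint. Passing `get_status` in as a function keeps the polling loop independent of the HTTP transport, which also makes it easy to test.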

Example: Harshness Map

A harshness map process evaluates environmental conditions like wave height, sea ice concentration, and wind speed to generate a map that informs maritime activities and environmental assessments.

Example Input Parameters:

start_date (date): Start of the analysis period.

end_date (date): End of the analysis period.

region (geometry): Spatial region of interest.

wave_height_threshold (number): Minimum wave height to consider (meters).

resolution (object): Grid resolution (e.g., {x: 0.5, y: 0.5} degrees).

formula (string): Mathematical formula defining the calculation logic.
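These parameters can be captured client-side as a typed, validated structure before a harshness map run is requested. This is a minimal sketch: the field names come from the parameter list, but treating `region` as a GeoJSON geometry, splitting `resolution` into two fields, the validation rules, and the example formula string are assumptions for illustration only.

```python
from dataclasses import dataclass
from datetime import date
from typing import Any, Dict


@dataclass
class HarshnessInputs:
    """Input parameters of the harshness map process."""

    start_date: date
    end_date: date
    region: Dict[str, Any]        # assumed to be a GeoJSON geometry
    wave_height_threshold: float  # meters
    resolution_x: float           # degrees
    resolution_y: float           # degrees
    formula: str

    def to_inputs(self) -> Dict[str, Any]:
        """Validate the values and return a JSON-serializable inputs mapping."""
        if self.end_date < self.start_date:
            raise ValueError("end_date must not precede start_date")
        if self.wave_height_threshold < 0:
            raise ValueError("wave_height_threshold must be non-negative")
        if self.resolution_x <= 0 or self.resolution_y <= 0:
            raise ValueError("resolution must be positive")
        return {
            "start_date": self.start_date.isoformat(),
            "end_date": self.end_date.isoformat(),
            "region": self.region,
            "wave_height_threshold": self.wave_height_threshold,
            "resolution": {"x": self.resolution_x, "y": self.resolution_y},
            "formula": self.formula,
        }


example = HarshnessInputs(
    start_date=date(2024, 1, 1),
    end_date=date(2024, 3, 31),
    region={"type": "Polygon",
            "coordinates": [[[0, 70], [30, 70], [30, 80], [0, 80], [0, 70]]]},
    wave_height_threshold=2.0,
    resolution_x=0.5,
    resolution_y=0.5,
    # Illustrative placeholder only; not the actual harshness formula or syntax.
    formula="0.5 * wave_height + 0.5 * wind_speed",
)
```

Validating dates and thresholds before submission catches obvious mistakes locally instead of after a job has been queued.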

Flexibility in Onboarding Algorithms

Custom algorithms can be onboarded with the following minimal requirements:

Algorithms must be dockerized to ensure portable and consistent execution.

Input parameters should be passed to the Docker container, and results must either be saved locally to an output folder or uploaded directly to the Data Lake.

Any algorithm that meets these requirements can be hosted, which keeps the capability versatile across diverse computational tasks.
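The two onboarding requirements can be sketched as a Python entrypoint that a container image would run once per execution. This is a minimal sketch under assumed conventions: the `PARAMS` and `OUTPUT_DIR` environment variables, the `--params`/`--output-dir` flags, the default `output` folder, and the `result.json` file name are hypothetical, not a documented Information Factory contract.

```python
import argparse
import json
import os
from pathlib import Path
from typing import Any, Dict, List, Optional, Tuple


def parse_args(argv: Optional[List[str]] = None) -> Tuple[Dict[str, Any], Path]:
    """Read JSON input parameters and the output folder from CLI flags or env vars."""
    parser = argparse.ArgumentParser(description="Example hosted algorithm entrypoint")
    # Hypothetical convention: parameters arrive as a JSON string in PARAMS.
    parser.add_argument("--params", default=os.environ.get("PARAMS", "{}"))
    # Hypothetical convention: results are written to OUTPUT_DIR (default ./output).
    parser.add_argument("--output-dir", default=os.environ.get("OUTPUT_DIR", "output"))
    args = parser.parse_args(argv)
    return json.loads(args.params), Path(args.output_dir)


def run_algorithm(params: Dict[str, Any]) -> Dict[str, Any]:
    """Placeholder for the real processing logic; here it only echoes the inputs."""
    return {"status": "ok", "inputs": params}


def main(argv: Optional[List[str]] = None) -> Path:
    """Run the algorithm once and write its result into the output folder."""
    params, output_dir = parse_args(argv)
    output_dir.mkdir(parents=True, exist_ok=True)
    result_path = output_dir / "result.json"
    result_path.write_text(json.dumps(run_algorithm(params), indent=2), encoding="utf-8")
    return result_path
```

Inside the image, the module would call `main()` behind an `if __name__ == "__main__":` guard. Uploading results straight to the Data Lake would replace the final write step; keeping parameter parsing separate from `run_algorithm` lets the same processing code run locally and in the container.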

Visualization and Analysis

The platform supports out-of-the-box visualization through integration with the dashboards and analysis tools of the eodash Earth Observation Ecosystem. Customized tools can also be hosted to meet specific user needs.

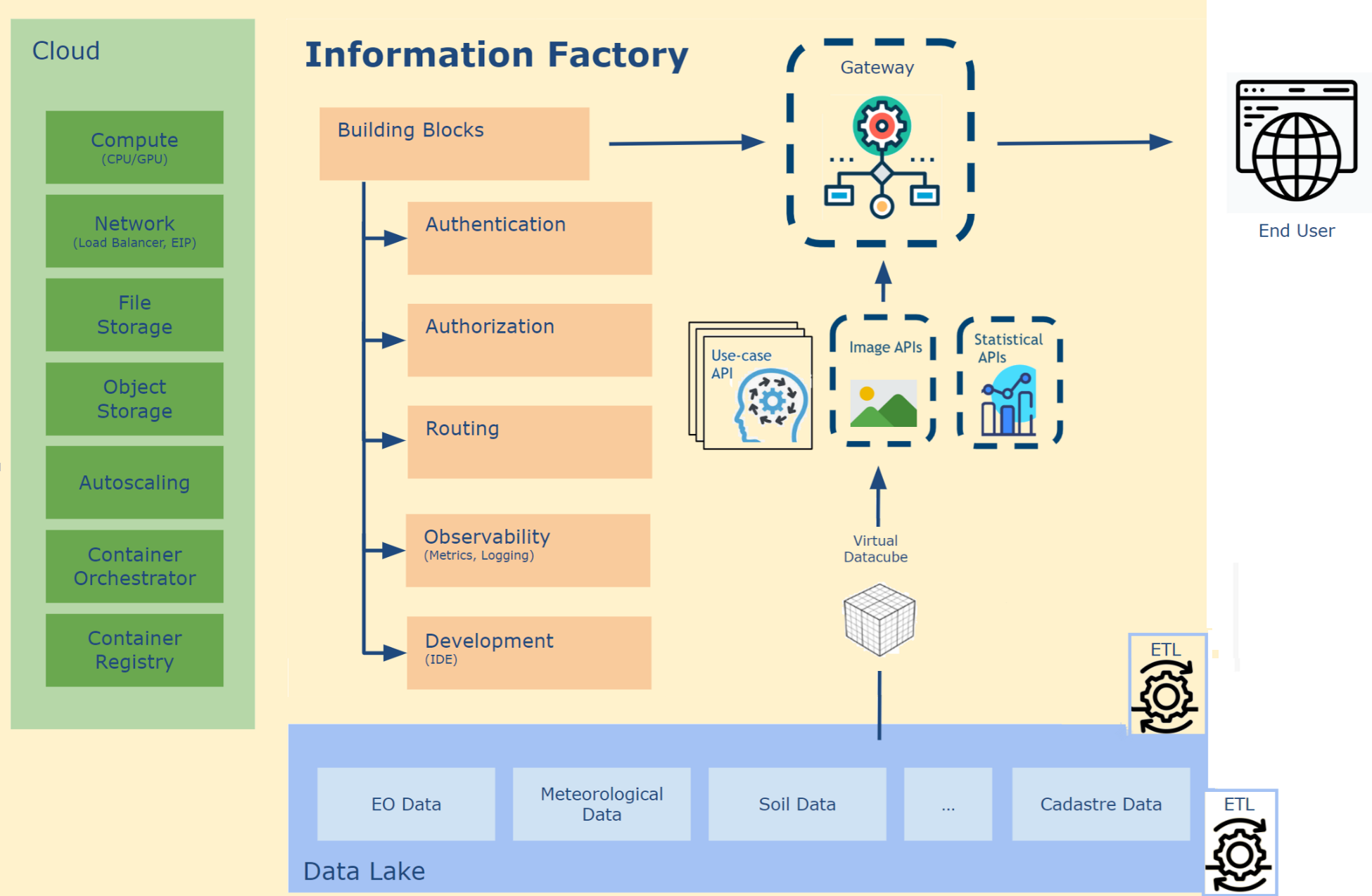

Architecture Overview

Algorithm Hosting: Algorithms are encapsulated in Docker containers.

Data Access: Processes integrate seamlessly with the Information Factory’s Data Lake and Dynamic Data Cube services.

Execution Framework: Algorithms can be triggered via API calls, with results logged and tracked through consistent interfaces.

Output Delivery: Results are stored in the Data Lake for visualization, further processing, or direct download.

Getting Started

Onboarding: Package your algorithm into a Docker container and define the input parameters and output data requirements.

Integration: Register the algorithm within the Information Factory ecosystem.

Execution: Use the headless execution framework to start, monitor, and retrieve results for your algorithm.

Visualize: Integrate outputs into dashboards or geospatial analysis tools to make actionable insights available.
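For the Integration step, registration presumably involves a machine-readable description of the algorithm's inputs and outputs. The sketch below assumes a structure loosely following OGC API - Processes process descriptions (inputs described by JSON Schema, with `minOccurs` defaulting to 1); the `container` field, image reference, process ID, and output media type are hypothetical. A small helper checks an execution request against the declared inputs before it is submitted.

```python
from typing import Any, Dict, List

# Hypothetical registration document for the harshness map example.
HARSHNESS_PROCESS: Dict[str, Any] = {
    "id": "harshness-map",
    "title": "Harshness Map",
    "version": "1.0.0",
    "container": {"image": "registry.example.org/harshness-map:1.0.0"},
    "inputs": {
        "start_date": {"schema": {"type": "string", "format": "date"}},
        "end_date": {"schema": {"type": "string", "format": "date"}},
        "region": {"schema": {"type": "object"}},  # GeoJSON geometry (assumed)
        "wave_height_threshold": {"schema": {"type": "number", "minimum": 0}},
        "resolution": {"schema": {"type": "object"}},
        "formula": {"schema": {"type": "string"}},
    },
    "outputs": {
        "harshness_map": {"schema": {"type": "string", "contentMediaType": "image/tiff"}},
    },
}


def missing_inputs(description: Dict[str, Any], inputs: Dict[str, Any]) -> List[str]:
    """Return required inputs (minOccurs defaults to 1) absent from an execution request."""
    return sorted(
        name
        for name, spec in description["inputs"].items()
        if spec.get("minOccurs", 1) > 0 and name not in inputs
    )
```

Declaring inputs once and checking requests against that declaration keeps the API layer, the container, and any dashboards consuming the outputs working from the same contract.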

Information Factory Value

Connecting to the Information Factory ecosystem provides:

Effortless Data Access: Directly utilize curated data sets from the Data Lake.

Dynamic Insights: Combine real-time or historical data via the Dynamic Data Cube for enriched analyses.

Scalable Infrastructure: Leverage cloud computing resources to run complex algorithms efficiently.